Can’t decide between a LiDAR and a camera robot vacuum? You’re not alone. Many homeowners struggle with poor navigation, missed spots, or robots that get stuck in dark corners or tangled in cords. The right sensor system changes everything, from better cleaning paths to fewer interruptions.

In this guide, you’ll learn how LiDAR and camera navigation work, how they compare in robot vacuums and self-driving cars, and which Narwal model suits your space. We’ll also break down cost, complexity, and real-world performance—so you can choose smart and clean smarter.

LiDAR vs Camera: What’s the Difference?

LiDAR is an active sensor that emits laser pulses to measure distance and create 3D spatial maps. Camera vision is passive—it captures visible light to form 2D images. This fundamental difference affects how each system handles light, depth, and navigation.

In short: LiDAR works in the dark and offers native depth perception, while cameras need good lighting and use AI to estimate depth. Let’s look at how each works in practice.

How LiDAR and Camera Sensors Work

LiDAR (Light Detection and Ranging) sends out rapid laser pulses. When these pulses bounce off objects and return, the sensor calculates distance using the time-of-flight method. The result is a highly accurate 3D map that shows the shape and position of objects. This system works regardless of ambient light and is widely used in robotics, autonomous cars, and smart vacuums.
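The time-of-flight idea can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not any vendor's firmware: the function name `tof_distance_m` and the 20-nanosecond echo are made up for the example.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to the target from a pulse's round-trip time.

    The pulse travels to the object and back, so the one-way
    distance is half of the total path length.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A hypothetical echo that returns after 20 nanoseconds.
print(f"{tof_distance_m(20e-9):.2f} m")  # prints "3.00 m"
```

Because light covers about 30 cm per nanosecond, centimeter-level accuracy depends on timing the echo to within a fraction of a nanosecond.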

Camera vision uses optical sensors to collect visible light and convert it into 2D pixel-based images. These images can include colors, textures, and patterns, which are processed to recognize objects and surfaces. However, cameras depend on ambient lighting and don’t provide built-in depth—making them less reliable in dark or reflective spaces.

LiDAR vs Camera: Pros and Cons Comparison

| Dimension | Camera | LiDAR |
| --- | --- | --- |
| Light Dependency | Needs ambient light | Works in the dark |
| Output Type | 2D images (color, texture) | 3D point cloud (spatial distance, shape) |
| Depth Perception | Requires AI estimation | Native depth with centimeter accuracy |
| Night Vision | Limited | Strong |
| Cost & Power | Low cost, low power | Higher cost, more processing power |

In complex or changing environments, LiDAR offers better precision and reliability, especially in low light. Cameras are cost-effective and work well where lighting is stable and visual details matter. Many advanced systems today combine both to balance mapping accuracy and object recognition.

LiDAR vs Camera in Robot Vacuums: What They Do and How They Differ

Robot vacuums rely on sensors to move around the home, understand the environment, and clean efficiently. Two of the most common technologies used for navigation are LiDAR and camera vision. Each plays a different role and has different strengths.

LiDAR in Robot Vacuums

In a robot vacuum, LiDAR is a laser-based sensor that measures the distance between the vacuum and objects in the room. By rotating and scanning in every direction, it builds a precise map of the space. This allows the vacuum to plan efficient cleaning paths, avoid collisions, and work in both light and dark environments.
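To make the "rotate, scan, and map" step concrete, here is a minimal Python sketch. It assumes a simplified single-plane scanner that reports evenly spaced range readings over one full rotation; the function names, the 5 cm cell size, and the sample readings are all hypothetical, and this article does not describe how Narwal's own mapping works.

```python
import math


def scan_to_points(ranges_m, start_angle_rad=0.0):
    """Convert evenly spaced range readings from one full rotation
    into (x, y) points in the robot's own frame."""
    n = len(ranges_m)
    if n == 0:
        return []
    step = 2.0 * math.pi / n  # angle between neighbouring readings
    points = []
    for i, r in enumerate(ranges_m):
        if r is None or r <= 0:  # skip missing or invalid returns
            continue
        angle = start_angle_rad + i * step
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


def mark_occupied(points, cell_size_m=0.05):
    """Return the set of grid cells (col, row) that contain a point."""
    return {
        (math.floor(x / cell_size_m), math.floor(y / cell_size_m))
        for x, y in points
    }


# Four hypothetical readings at 0, 90, 180, and 270 degrees;
# None stands for a pulse that never came back.
points = scan_to_points([1.0, 2.0, None, 0.5])
occupied_cells = mark_occupied(points)
print(len(points), "points in", len(occupied_cells), "occupied cells")
```

A real mapping system also has to track the robot's own motion between scans so that points from different positions line up; that part is left out here.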

Camera in Robot Vacuums

Camera-based systems use visual data to understand surroundings. They recognize features like walls, furniture, and doorways by analyzing images. Some systems can identify object types such as cords, shoes, or pet waste. However, they require good lighting to function well and may not work accurately in dark or shadowed areas.

Key Differences Between LiDAR and Camera in Robot Vacuums

| Feature | LiDAR | Camera |
| --- | --- | --- |
| Works in the dark | Yes | No (needs good lighting) |
| Mapping accuracy | High (precise 3D spatial data) | Medium (depends on visual details) |
| Object recognition | Limited (detects shape/distance) | High (can classify object types) |
| Use cases | Suitable for all layouts and lighting | Best in small, well-lit environments |

- LiDAR offers fast and reliable navigation, especially in large homes or rooms with limited lighting. It helps the vacuum stay accurate and avoid unnecessary repeats.
- Cameras help the robot understand what it’s seeing. This is useful when avoiding smaller or unexpected objects.
- High-end models often combine both systems to achieve better performance. LiDAR handles structure and layout; the camera helps with specific object detection.

If you want strong mapping, stable navigation, and low-maintenance cleaning, choose a vacuum with LiDAR. If your home has many small obstacles, a camera-supported system adds helpful object recognition.

Now that we’ve seen how LiDAR and camera systems differ in navigation, mapping, and object handling, let’s look at how Narwal applies these technologies across its latest robot vacuum models—and which one might be right for your home.

Which Narwal Robot Vacuum with LiDAR Is Right for You?

Narwal offers several LiDAR-powered robot vacuums, each built for different home environments and cleaning needs. Below is a simplified overview of four top models to help you find the best match for your household.

Narwal Flow — Fully Automated Flagship

Best for: Large homes, premium users who want a hands-free experience

Highlights:

-

22,000Pa suction with deep carpet cleaning performance

-

Real-time mop self-cleaning with 113°F warm water and 12N pressure

-

Works with Matter, Alexa, Siri, and Google

-

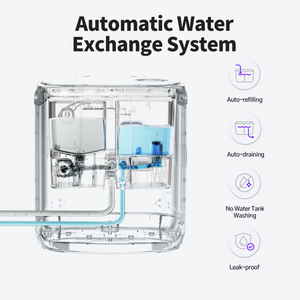

Full automation: hot water sterilization, auto water refill, 120-day dust storage

[cta:flow-robot-vacuum-and-mop]

Narwal Freo Z10 Ultra — Intelligent Object Avoidance + Edge Cleaning

Best for: Homes with pets, tight spaces, or lots of small objects

Highlights:

- EdgeReach™ triangular mop cleans deep into corners
- Detects and avoids over 200 obstacles, including pet waste and cords
- Automatically identifies mess types and adjusts cleaning mode
- Supports high-temperature mop washing and self-emptying

[cta:narwal-freo-z10-ultra-robot-vacuum-mop]

Narwal Freo Pro — Anti-Tangle Cleaning for Pet Owners

Best for: Households with long hair or shedding pets

Highlights:

- SGS-certified anti-tangle brush system
- AI DirtSense detects dirt levels and re-cleans until spotless
- Optimized for mixed flooring and pet messes

[cta:narwal-freo-pro-robot-vacuum-mop]

Narwal Freo Z10 — Reliable Core Model for Everyday Use

Best for: Small apartments, budget-conscious users

Highlights:

- Fast LiDAR 4.0 mapping (under 8 minutes)
- MopExtend™ improves edge coverage
- A great entry point for first-time robot vacuum users

[cta:narwal-freo-z10-robot-vacuum-mop]

Quick Comparison

| Model | Ideal For | Obstacle Avoidance | Mop Cleaning | Automation Level |
| --- | --- | --- | --- | --- |
| Narwal Flow | Large homes, full automation | AI-powered, 200+ objects | Real-time, warm water | Full (auto everything + Matter) |
| Narwal Freo Z10 Ultra | Pet homes, detailed cleaning | Smart AI avoidance | High-temp auto wash | High |
| Narwal Freo Pro | Hair/pet-heavy households | Structured light + tangle-free | Dirt-sensing rewash | Medium-high |
| Narwal Freo Z10 | Small homes, first-time buyers | Standard LiDAR | Manual rinse | Basic |

If you want the most effortless experience, choose Flow or Z10 Ultra. For pet-related messes, Freo Pro provides strong anti-tangle and targeted cleaning. Freo Z10 offers smart navigation and solid performance at an accessible price.

Which Is Best in Self-Driving Cars? LiDAR vs Camera

Autonomous vehicles need to do more than just see. They must recognize signs, detect lane boundaries, judge distances, and respond quickly to obstacles. Both LiDAR and camera vision contribute to these tasks, but in different ways.

Camera vision is good at reading traffic lights, lane markings, and road signs. It captures color and detail, which helps the vehicle understand traffic rules and follow road cues. However, cameras rely on ambient light. Their performance can drop in dark environments, in glare, or when weather conditions change suddenly.

LiDAR does not need external light to work. It can detect precise distances and shapes of vehicles, people, and road edges even at night or in tunnels. It gives the system strong spatial awareness, which is hard to match with vision alone.

Some companies are developing vision-only approaches. These rely on advanced machine learning and massive data to replace depth sensing with intelligent prediction. While promising in the long term, such systems still face challenges in handling complex or unpredictable conditions.

For now, combining LiDAR, camera, and radar remains the most reliable setup for safe self-driving.
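One simple way to see why combining sensors helps is inverse-variance weighting, a standard statistical technique in which each sensor's distance estimate is weighted by how much it can be trusted. The sketch below is an illustration only: the function name and the sample readings are invented, and production driving stacks use far more elaborate fusion (for example, Kalman filters over tracked objects).

```python
def fuse_distances(estimates):
    """Inverse-variance weighted average of (distance_m, std_dev_m) pairs.

    Sensors with smaller uncertainty get larger weights.
    Returns (fused_distance_m, fused_std_dev_m).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = []
    for _, std in estimates:
        if std <= 0:
            raise ValueError("standard deviation must be positive")
        weights.append(1.0 / (std * std))
    total = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return fused, (1.0 / total) ** 0.5


# Hypothetical readings of the same obstacle: (distance, uncertainty).
readings = [
    (10.0, 0.1),  # LiDAR: precise range
    (10.4, 0.5),  # radar: coarser range
    (9.0, 2.0),   # camera: depth estimated from the image
]
distance, uncertainty = fuse_distances(readings)
print(f"fused: {distance:.2f} m (std dev {uncertainty:.2f} m)")
```

The fused uncertainty is never larger than that of the best single sensor, which is the statistical version of the claim that a combined setup is more reliable than any one sensor alone.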

LiDAR vs Camera: Cost and System Complexity

LiDAR and camera vision differ not only in how they work but also in what they cost and how complex they are to implement. From hardware pricing to software requirements, these factors can greatly affect which sensor is the better fit.

Sensor Hardware Pricing and Installation Factors

Camera systems are usually less expensive. They use small image sensors that are mass-produced and easy to install. Most only need basic power and data connections, which makes setup fast and simple.

LiDAR sensors cost more. Although prices have dropped, especially for 2D models, high-quality 3D LiDAR is still much more expensive than cameras. These sensors are often larger and more sensitive to how and where they are mounted. Installation may take more time and require careful alignment to avoid errors.

Data Processing Demands and Software Dependencies

LiDAR creates large amounts of data. To use it in real time, the system needs strong processing power and memory. This can be a challenge for low-power or compact devices.

Camera vision creates smaller files but needs more analysis. Systems must use computer vision and AI models to detect objects, understand depth, and track movement. This adds software complexity, even if the data is lighter.

Camera systems often work with common tools like OpenCV or TensorFlow. LiDAR may need special software to read and process 3D point clouds. These extra tools can make the system harder to maintain or scale.

Radar vs LiDAR vs Camera vs Ultrasonic

In most advanced systems, no single sensor is enough. Combining radar, LiDAR, cameras, and ultrasonic sensors allows machines to understand both what is around them and how far away it is. This multi-layered approach offers better safety, stability, and performance in real-world conditions.

| Sensor Type | Main Strength | Limitations | Best Use Cases |
| --- | --- | --- | --- |
| Radar | Works in fog, rain, and darkness | Low resolution, limited object shape detection | Speed detection, long-range obstacle sensing |
| LiDAR | High-precision 3D mapping | Affected by weather, high cost, higher power usage | Navigation, mapping, real-time object detection |
| Camera | Rich visual detail, color, text recognition | Sensitive to light conditions, no native depth sensing | Traffic signs, lane detection, object classification |
| Ultrasonic | Simple, low-cost, reliable at close range | Very limited range and no detailed spatial data | Parking assist, proximity detection |

What Makes LiDAR Better for Multi-Room Cleaning?

LiDAR enables robot vacuums to create precise maps of your entire home, including multiple rooms and hallways. This allows the robot to plan efficient routes, remember layouts, and resume cleaning from exactly where it left off—ideal for larger or multi-room homes.
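As a rough illustration of route planning and resuming on a stored map, the Python sketch below orders the free cells of a small grid in a back-and-forth (boustrophedon) sweep, a common coverage pattern. Whether any particular vacuum plans this way is an assumption; the grid, function names, and interruption point are hypothetical.

```python
def boustrophedon_path(grid):
    """Visit every free cell of a grid row by row, alternating direction.

    grid is a list of rows; 0 marks a free cell and 1 an obstacle.
    Returns the (row, col) cells in visiting order. This only orders
    the cells; it does not plan detours around obstacles.
    """
    path = []
    for r, row in enumerate(grid):
        # Even rows sweep left to right, odd rows right to left.
        cols = range(len(row)) if r % 2 == 0 else range(len(row) - 1, -1, -1)
        path.extend((r, c) for c in cols if row[c] == 0)
    return path


def resume_from(path, last_cleaned):
    """Remaining cells after an interruption at `last_cleaned`."""
    return path[path.index(last_cleaned) + 1:]


# A 3x3 room with one obstacle in the middle.
room = [
    [0, 0, 0],
    [0, 1, 0],
    [0, 0, 0],
]
route = boustrophedon_path(room)
print(route)
print(resume_from(route, (1, 2)))
```

Because the map persists between runs, the robot only needs to remember the last cell it cleaned to pick up where it stopped, for example after returning to the dock to recharge.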

Do Camera-Based Robot Vacuums Struggle on Black or Reflective Floors?

Yes. Many camera-only vacuums rely on visible light and pattern recognition, which may fail on dark or glossy surfaces. These floors can confuse visual sensors, causing missed areas or navigation errors. LiDAR is far less affected because it measures distance rather than color or brightness, although mirrors and glass can still produce false readings.

Is LiDAR Navigation Safer Around Stairs and Edges?

While both camera and LiDAR vacuums often include cliff sensors, LiDAR-based models tend to pair this with more accurate mapping. This allows for safer boundary detection and the ability to set precise no-go zones near stairs or drop-offs using the app.
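Conceptually, a no-go zone is just a region on the stored map that the planner refuses to enter. The sketch below models zones as axis-aligned rectangles in map coordinates; the `NoGoZone` class, the `is_allowed` helper, and the coordinates are hypothetical and do not reflect the Narwal app's actual data format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NoGoZone:
    """Axis-aligned rectangle in map coordinates (metres)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        """True if the point lies inside the zone or on its border."""
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def is_allowed(x, y, zones):
    """True if the point lies outside every no-go zone."""
    return not any(zone.contains(x, y) for zone in zones)


# A hypothetical zone drawn in front of a staircase.
stairs = NoGoZone(x_min=2.0, y_min=0.0, x_max=3.0, y_max=1.0)
print(is_allowed(2.5, 0.5, [stairs]))  # prints "False"
print(is_allowed(1.0, 0.5, [stairs]))  # prints "True"
```

The more accurate the map, the closer such a zone can be drawn to the real edge without the robot either crossing it or leaving a wide strip uncleaned.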

Which System Reacts Faster to Sudden Obstacles Like Pets?

Camera-based systems with AI object recognition may detect specific shapes like pets or cords. However, LiDAR-based systems typically react faster in low light or cluttered spaces because they continuously scan distance data in real-time. Top models combine both for faster and smarter reactions.

Do I Need Both LiDAR and Camera in a Robot Vacuum?

Not necessarily. If your main concerns are mapping accuracy, reliable coverage, and dark-room cleaning, LiDAR is often enough. If you want your robot to identify object types (like pet waste or socks), then a hybrid model with both LiDAR and cameras provides the best results.

Choose Between LiDAR and Camera Based on What the System Needs

Choosing between LiDAR and camera vision is not about declaring one better than the other. It is about knowing how each sensor fits into a broader system, and how their strengths can complement each other.

As more products adopt hybrid approaches, we see a clear shift toward balanced design. Narwal has already started combining LiDAR and camera vision in flexible ways, adapting sensor choices to the task rather than following a one-size-fits-all model. This mindset is shaping the next generation of smart, context-aware machines.